Apache Hadoop is an open-source software framework from the Apache Software Foundation, and it is widely used to store and process large volumes of data. Hadoop projects have grown steadily in recent years because of the framework's advantages. Hadoop is mainly used for distributed data storage and large-scale batch computation. It implements the MapReduce programming model and runs on clusters of many networked computers. This article provides useful research information on Hadoop Projects for Final Year Students!

Hadoop Architecture

The core Hadoop architecture consists of two main components:

- Hadoop Distributed File System (HDFS)

- MapReduce

MapReduce has two execution engines: the classic engine (MapReduce/MR1) and MapReduce 2 (MR2), which runs on YARN. Together, these components give Hadoop its reliability, cost-efficiency, and scalability. A Hadoop cluster consists of one master node and multiple slave (worker) nodes. In a small MR1 cluster, the master node runs the NameNode and JobTracker and may also run a DataNode and TaskTracker. Each slave node runs a DataNode and a TaskTracker.
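The NameNode's job of tracking where data lives can be illustrated with a small model. The Python sketch below shows the bookkeeping involved: a file is cut into fixed-size blocks, and each block is assigned to several DataNodes. It assumes the Hadoop 2.x default block size of 128 MB and the default replication factor of 3. It uses simple round-robin placement, which is a simplification: real HDFS uses a rack-aware placement policy. The node names and function names are hypothetical.

```python
BLOCK_SIZE_MB = 128   # default dfs.blocksize in Hadoop 2.x and later
REPLICATION = 3       # default dfs.replication

def split_into_blocks(file_size_mb, block_size_mb=BLOCK_SIZE_MB):
    """Return the sizes of the blocks a file of file_size_mb is split into."""
    full, rest = divmod(file_size_mb, block_size_mb)
    return [block_size_mb] * full + ([rest] if rest else [])

def place_replicas(num_blocks, datanodes, replication=REPLICATION):
    """Map block index -> DataNodes holding a replica of that block.

    Round-robin placement (a simplification, NOT HDFS's rack-aware policy);
    the replicas of one block always land on distinct nodes.
    """
    if replication > len(datanodes):
        raise ValueError("replication factor exceeds number of DataNodes")
    placement = {}
    for block in range(num_blocks):
        start = block % len(datanodes)
        placement[block] = [datanodes[(start + i) % len(datanodes)]
                            for i in range(replication)]
    return placement

# Hypothetical 300 MB file stored on a four-node cluster.
blocks = split_into_blocks(300)
layout = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
print(blocks)  # [128, 128, 44]
print(layout)
```

The last block holds only 44 MB. HDFS does not pad a partial final block to the full block size, so it uses only the space it needs.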

Next, let us look at the main modules of Apache Hadoop. Hadoop provides a range of standard utilities and services for cluster resource management, parallel data processing, and high-throughput data access. Alongside Hadoop Common, the shared library used by the other modules, Hadoop has three main modules, listed here for your reference.

Hadoop Modules List

- Hadoop YARN

- Hadoop MapReduce

- HDFS

Each module faces technical constraints when it works with large volumes of data. As an illustration, we take Hadoop MapReduce and itemize the technical issues that arise when implementing it.

Hadoop MapReduce Projects

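- Distribution of Intermediate File Locations by the Master

  - Map tasks write many intermediate files to the local disks of the nodes they run on. The master must record where each file is stored and pass these locations to the reduce tasks

- Reduce Tasks Run Only After Map Tasks Finish

  - The reduce function cannot run until every map task has completed successfully, because any map task may emit values for any key. Reducers may begin copying finished map outputs earlier

- Varied Data

  - Data is produced by many sources in many different formats

- Data Locality-based Task Scheduling

  - The scheduler needs to know where each input block is stored. It can then run the computation on, or close to, the node holding the data instead of moving the data across the network

- Re-Execution of MapReduce Tasks (if a Map Task Fails before the Reduce Phase Completes)

  - If a worker node fails, its map tasks are re-executed on another node, because their intermediate output was stored on the failed node's local disk. If the master itself fails, the job must be restarted. YARN can relaunch the job's ApplicationMaster on another node

- Intermediate Data

  - Map tasks generate a large number of intermediate files, which must be stored, sorted, and transferred to the reducers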
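Several of these issues can be seen in a small in-memory simulation. The Python sketch below is a plain word count, not Hadoop's actual API. Each map task produces its own intermediate output. The shuffle then groups every intermediate pair by key. The reduce step runs only after all map tasks have finished, which is the barrier described above. A map task whose output is lost is simply re-run from its input split. The function names and the `fail_once` failure injection are hypothetical, for illustration only.

```python
from collections import defaultdict

def map_task(split):
    """Map: emit (word, 1) for every word in one input split."""
    return [(word, 1) for line in split for word in line.split()]

def run_map_tasks(splits, fail_once=frozenset()):
    """Run one map task per split, re-executing tasks whose first attempt fails.

    `fail_once` holds indices of tasks whose first attempt is assumed to be
    lost (hypothetical failure injection for illustration only).
    """
    outputs, attempts = {}, defaultdict(int)
    for i, split in enumerate(splits):
        while True:
            attempts[i] += 1
            if i in fail_once and attempts[i] == 1:
                continue  # output lost with its node: run the task again
            outputs[i] = map_task(split)
            break
    return outputs, dict(attempts)

def shuffle(map_outputs):
    """Group every intermediate (key, value) pair by key, sorted by key."""
    groups = defaultdict(list)
    for pairs in map_outputs.values():
        for key, value in pairs:
            groups[key].append(value)
    return dict(sorted(groups.items()))

def reduce_phase(groups):
    """Reduce: sum the values collected for each key."""
    return {key: sum(values) for key, values in groups.items()}

def word_count(splits, fail_once=frozenset()):
    map_outputs, attempts = run_map_tasks(splits, fail_once)
    # Barrier: reduce runs only once every split has a finished map output.
    if len(map_outputs) != len(splits):
        raise RuntimeError("not all map tasks finished")
    return reduce_phase(shuffle(map_outputs)), attempts

splits = [["big data big"], ["data hadoop"]]
counts, attempts = word_count(splits, fail_once={1})
print(counts)    # {'big': 2, 'data': 2, 'hadoop': 1}
print(attempts)  # {0: 1, 1: 2}
```

The second map task needs two attempts. The final counts are unaffected, because map tasks are deterministic and can safely be re-run.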

We have also listed two primary challenges of Hadoop below. Our researchers have strong solutions for these challenges, and once you connect with us, we are ready to share our proposed techniques and algorithms. We also support you with other major challenges and assure you of the best-fitting solution for any challenging research issue.

Hadoop Research Topics

- Insufficient Tools

  - Handling huge data is difficult with conventional tools

  - Standardized tools are needed to carry out every stage of data processing, from data collection to visualization, with high quality

- Data Security

  - Data security is one of the main challenges of Hadoop, since it processes huge-scale data from various sources

  - For instance, Hadoop clusters can be secured with the Kerberos authentication protocol

Next, let us look at research ideas drawn from real-time applications of Hadoop. If you need more research topics related to real-world scenarios, contact us. We fulfill your requirements in your desired research areas, and our proposed topics are up to date with strong future research scope. Overall, we support both real-time and non-real-time Hadoop projects for final year students.

Top 3 Research Ideas for Hadoop Projects for Final Year Students

- Cost-based Data and Activity

  - Efficient Resource Distribution in Low-cost Applications

- Patient Health Data and Behavior

  - Patient Health Monitoring System based on Continuous Behavior and Medical Prescription

- Medical Data Analysis

  - Medical Image Investigation using Imaging Informatics

  - Disease Prediction using Clinical Trials in Bio-Informatics

Operating System for Hadoop

Most of our Hadoop projects for final year students are requested on Windows (all versions). In addition, our experts develop projects on the following platforms:

- SUSE Linux

- CentOS platforms

- Linux & Ubuntu

These platforms can be easily integrated with AWS, Python, Spark, MATLAB, and similar tools. We also support many tools that integrate with Hadoop projects, and some of them are given below for your reference. If you are interested in another tool, please let us know and we will support you in it.

Hadoop Integration with Other Tools

- NoSQL

  - Couchbase

  - Cassandra

  - MongoDB

- Data Ingestion

  - Flume

  - Sqoop

- Visualization

  - R

  - Tableau

  - SAS

- ETL (Extract / Transform / Load)

  - Talend

  - Pentaho

  - Informatica

We have also listed a few significant languages used to develop Hadoop projects. These languages suit beginners such as final year students, and they are considered developer-friendly because of their benefits during implementation. Our developers have ample experience in building complex applications, so we are ready to develop applications at any level of complexity.

Best Hadoop Programming Languages

- C

- Python

- Java

- Scala

- Shell Script
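Python is usually connected to Hadoop through Hadoop Streaming, which can run any executable as the mapper or reducer. The mapper reads input lines on standard input and writes tab-separated key/value lines. The framework sorts those lines by key, and the reducer reads the sorted lines. The sketch below writes a word-count mapper and reducer as functions over iterables of lines, so they can be tested locally without a cluster. The function names are our own. Wiring them to `sys.stdin`/`sys.stdout` and submitting the job are left out.

```python
from itertools import groupby

def mapper(lines):
    """Streaming-style mapper: emit 'word<TAB>1' for every word."""
    for line in lines:
        for word in line.split():
            yield f"{word}\t1"

def reducer(sorted_lines):
    """Streaming-style reducer: input lines must already be sorted by key."""
    pairs = (line.rstrip("\n").split("\t", 1) for line in sorted_lines)
    for word, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{word}\t{sum(int(count) for _, count in group)}"

if __name__ == "__main__":
    # Local dry run of map -> sort -> reduce on two sample lines.
    for out in reducer(sorted(mapper(["big data big", "data hadoop"]))):
        print(out)
```

In a real job, each function would be a separate script that reads `sys.stdin` and prints to `sys.stdout`. The scripts are passed to the Hadoop Streaming JAR through its `-mapper` and `-reducer` options.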

We have already seen the tools and technologies that integrate with Hadoop. Next, let us look at big data development tools that are similar to Hadoop. Our developers have ample experience with these tools as well, so we can suggest the development tool that best fits your project needs. We can also develop your Hadoop projects for final year students with precise and satisfactory results.

List of Big Data Tools Similar to Hadoop

- BigQuery

- Pachyderm

- Apache Storm

- Apache Mesos

- Apache Flink

- Apache Spark

As an illustration, we have picked Apache Spark from the above list. Below are clustering techniques that give reliable results with it. We also provide close assistance at every stage of your chosen Hadoop project for final year students. Once you work with us, we take full responsibility for your Hadoop project until it reaches the best experimental results.

Big Data Clustering Techniques

- Density-based Clustering

- K-Means Clustering

- Hierarchical Clustering
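As a reference point for the K-Means entry, here is a plain single-machine sketch of Lloyd's algorithm in Python. It is not Spark's MLlib API. In a distributed implementation such as Spark's, the assignment step typically runs in parallel over data partitions, and only per-cluster sums and counts are combined, but the arithmetic is the same. The initial centroids are passed in explicitly to keep the example deterministic, which is an assumption. The density-based and hierarchical methods are not shown.

```python
import math

def kmeans(points, centroids, max_iter=100):
    """Lloyd's k-means; points and centroids are equal-length tuples."""
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest centroid (Euclidean).
        labels = [min(range(len(centroids)), key=lambda k: math.dist(p, centroids[k]))
                  for p in points]
        # Update step: each centroid moves to the mean of its assigned points.
        updated = []
        for k, old in enumerate(centroids):
            members = [p for p, label in zip(points, labels) if label == k]
            if members:
                updated.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                updated.append(old)  # keep the centroid of an empty cluster
        if updated == centroids:
            break  # no centroid moved: converged
        centroids = updated
    return centroids, labels

points = [(1, 1), (1.5, 2), (1, 0), (8, 8), (9, 8.5), (8, 9)]
centroids, labels = kmeans(points, [(1, 1), (8, 8)])
print(labels)  # [0, 0, 0, 1, 1, 1]
```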

Next, let us look at datasets for Hadoop projects. A large part of a project's result depends on its dataset. Hadoop projects in particular deal with data management and storage, so we pay close attention to datasets in every Hadoop project. A few widely used Hadoop datasets are given below for your reference. We can also recommend other datasets based on your project requirements and goals.

Datasets for Hadoop Projects

- Amazon

  - Amazon provides large datasets suitable for Hadoop through AWS

- Clearbits.net

  - Provides the complete data dump of Stack Exchange

  - Dataset Size – 10 GB

- AWS Public Datasets

  - Large open-source datasets hosted on the AWS platform

- Wikipedia

  - Provides large-scale data dumps for Hadoop practice

- Grouplens.org

  - Provides large datasets, such as MovieLens, for live examples

- University of Waikato

  - Provides high-quality datasets for machine learning

- LinkedData

  - Provides datasets in many different categories

- RDM

  - Provides huge-scale datasets for free Hadoop practice

- ICS

  - Provides a large collection of 180+ datasets

- ClueWeb09

  - Provides about 1 billion web pages collected in January and February 2009

  - Dataset Size – 5 TB

Next, we list example Hadoop configuration parameters. Once the project topic is confirmed, the hardware requirements must be estimated. This is usually the first step of project development.

Hadoop Configuration Parameters

- Cores per Node – 20

- Total Nodes – 66

- RAM per Node – 128 GB

- CPU – Intel Xeon E5-2670 v2 @ 2.5 GHz
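Multiplying the per-node figures by the node count gives the aggregate resources of this example cluster. The E5-2670 v2 has 10 cores per socket, so 20 cores per node is consistent with a dual-socket server. The memory per core is a useful starting point when sizing YARN containers:

```latex
\begin{aligned}
\text{total cores} &= 66 \times 20 = 1320\\
\text{total RAM}   &= 66 \times 128\ \text{GB} = 8448\ \text{GB}\\
\text{RAM per core} &= 128\ \text{GB} / 20 = 6.4\ \text{GB}
\end{aligned}
```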

In a business-oriented Hadoop environment, it is essential to get expert advice when analyzing data storage demand, since Hadoop handles big data that changes dynamically. To introduce Hadoop environment configuration, we list below the fundamental requirements for a cluster environment. For every Hadoop project we take on, we suggest a suitable environment configuration and parameters based on project needs.

Hadoop Environment Configuration

- Rate of compression – 3%

- Intermediate MapReduce outcome – 1x hard disk

- Data storage size – 600 TB

- Hard drive space usage – 60%–70% (depends on need)

- Machine overhead (logs, OS, etc.) – at least 2 disks

- Replication factor – 3x replicas (default)

- Future data growth – estimated from data trend analysis
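These factors can be combined into a rough capacity estimate. The Python sketch below assumes one common reading of the list:

- Every byte is stored three times (the default replication).
- An extra 1x of the data size is reserved for intermediate MapReduce output.
- Disks are filled to only about 70%.

It also assumes hypothetical worker nodes with twelve 4 TB disks, two of which are set aside for the OS and logs. The 3% compression figure is not modelled. Treat the result as a starting point, not a sizing rule.

```python
import math

def raw_capacity_tb(data_tb, replication=3, intermediate_factor=1.0,
                    target_utilization=0.7):
    """Raw disk (TB) needed to hold data_tb of user data.

    replication: HDFS copies per block (default 3).
    intermediate_factor: space for intermediate MapReduce output,
        as a multiple of data_tb (assumed 1x here).
    target_utilization: fraction of each disk we plan to fill (60-70%).
    """
    needed = data_tb * replication + data_tb * intermediate_factor
    return needed / target_utilization

def nodes_needed(raw_tb, disks_per_node=12, disk_tb=4, os_disks=2):
    """Worker nodes required, reserving os_disks per node for OS and logs.

    disks_per_node and disk_tb are hypothetical hardware assumptions.
    """
    usable_per_node = (disks_per_node - os_disks) * disk_tb
    return math.ceil(raw_tb / usable_per_node)

raw = raw_capacity_tb(600)     # 600 TB of data, as in the list above
print(round(raw, 1))           # 3428.6
print(nodes_needed(raw))       # 86
```

Lowering `target_utilization` to 0.6 raises the raw requirement to 4000 TB. This shows how sensitive the estimate is to the 60%–70% range.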

Next, let us look at the performance of Hadoop projects. Input data with high volume, high velocity, and varied formats may be difficult to process on a single node. A group of nodes, called a cluster, can manage such data better than a single machine. This is why Hadoop is useful for handling massive data regardless of its format, size, value, or structure. Below are two primary ways to measure the performance of a Hadoop deployment.

How to measure the performance of Hadoop?

- Measure Hadoop performance with two main tools: a performance monitor and a load generator

- Use monitors flexible enough to measure the following quantities

  - Response time of network packets

  - Count of instructions executed by the processor

  - Degree of multiprogramming (in a time-sharing system)
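On the monitor side, response times recorded while a load generator drives the system are usually summarised as an average plus a high percentile. The Python sketch below is a hypothetical helper, not part of Hadoop. It times repeated calls to any function, which stands in for a request or job submission, and reports the count, mean, nearest-rank 95th percentile, and maximum.

```python
import math
import time
from statistics import mean

def percentile(samples, pct):
    """Nearest-rank percentile (0 < pct <= 100) of a non-empty sample list."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

def summarize(samples):
    """Figures a performance monitor might report for a set of response times."""
    return {"count": len(samples), "mean": mean(samples),
            "p95": percentile(samples, 95), "max": max(samples)}

def generate_load(operation, requests=100):
    """Minimal load generator: call `operation` and record each latency (s)."""
    latencies = []
    for _ in range(requests):
        start = time.perf_counter()
        operation()
        latencies.append(time.perf_counter() - start)
    return latencies

# Stand-in workload; in practice this would be a request to the cluster.
stats = summarize(generate_load(lambda: sum(range(10_000)), requests=50))
print(stats["count"], stats["p95"] >= 0)  # 50 True
```

The 95th percentile is reported alongside the mean because a few slow responses can hide behind a healthy-looking average.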

We have also included some output parameters used to measure the efficiency and performance of a Hadoop system. Our developers can improve system performance by tuning parameters during the design and development phases. We also suggest appropriate parameters based on your project requirements. The measurements of these parameters are presented as graphs.

Output Parameters for Hadoop

- Spitted Records

- Combine Input Records

- Map Input Records

- Reduce Input Records

- Combine Output records

- Map Output Records

- Reduce Output Records

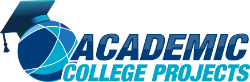

- CPU Time Spent
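The record counters are easier to interpret with a small example. The Python sketch below runs an in-memory word count with a combiner. For that run, it computes values analogous to Map Input/Output Records, Combine Input/Output Records, and Reduce Input/Output Records. The counter names follow Hadoop's TaskCounter values, but the numbers are computed by hand here rather than read from a real job. Spilling and CPU time are not modelled.

```python
from collections import Counter, defaultdict

def word_count_counters(splits):
    """Word count with a combiner; returns (word totals, record counters).

    Counter names follow Hadoop's TaskCounter values, but they are computed
    here by hand; this does not read real job counters.
    """
    counters = Counter()
    reduce_input = defaultdict(list)
    for split in splits:                       # one map task per split
        map_output = []
        for line in split:
            counters["MAP_INPUT_RECORDS"] += 1
            map_output.extend((word, 1) for word in line.split())
        counters["MAP_OUTPUT_RECORDS"] += len(map_output)

        combined = Counter()                   # combiner: local pre-aggregation
        for word, one in map_output:
            combined[word] += one
        counters["COMBINE_INPUT_RECORDS"] += len(map_output)
        counters["COMBINE_OUTPUT_RECORDS"] += len(combined)
        for word, count in combined.items():
            reduce_input[word].append(count)   # shuffle to the reducer

    totals = {}
    for word in sorted(reduce_input):          # reducer sees keys in sorted order
        counters["REDUCE_INPUT_RECORDS"] += len(reduce_input[word])
        totals[word] = sum(reduce_input[word])
        counters["REDUCE_OUTPUT_RECORDS"] += 1
    return totals, dict(counters)

totals, counters = word_count_counters([["big data big", "data"], ["hadoop data"]])
print(totals)    # {'big': 2, 'data': 3, 'hadoop': 1}
print(counters)
```

In this run, the combiner shrinks 6 map output records to 4 records before the shuffle. On real data with many repeated keys, the reduction is usually much larger, and it shows up directly in these counters.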

We hope you now have a clear idea of Hadoop project development from several angles, such as implementation tools, programming languages, datasets, and configuration parameters. Next, let us look at the top trending research areas of Hadoop. These areas offer a vast number of research ideas based on current developments, and our own ideas in them are original.

Important Hadoop Research Areas

- Data Mining

- Big Data Analytics

- Green Computing

- Mobile-Cloud Computing (MCC)

- Sensor and Ad-Hoc Networks

- Internet of Things (IoT)

- Software-Defined Networking (SDN)

Next, let us look at emerging trends in Hadoop. These trends are key to making Hadoop projects popular in real-world applications. If you are curious about other evolving research areas and trends, connect with us. We are here to support you in every possible way, so bring your own ideas or areas to learn about the latest innovations in them.

Emerging Trends in Hadoop

- Metadata Management

- Large-Scale Mixed Data Integration

- Face Emotion Detection using Sentiment Analysis

- Online Fraudulent Activities Recognition

- Live Drug Response Detection and Investigation

- Secure Healthcare Data Distribution from Remote Location

- Query-based Searching and Information Retrieval

Last but not least, let us look at the current interests of final year students in Hadoop-oriented projects. Our experts suggest these project ideas based on the demands of the final year students we support. Beyond this list, we have other exciting Hadoop project topics, so work with us to get more out of your final year Hadoop project.

Top 4 Hadoop Projects for Final Year Students

- Infrastructure

  - New Component Design

  - HBase, Hive, and Pig

- MapReduce

  - Data Flow Monitoring

  - Resource Provisioning

  - Scheduling

- Data Manipulation and Storage

  - Indexing

  - Database Management System

  - Random Access

  - Replication and Storage

  - Cloud Storage

  - Queries

  - Cloud Computing

- Others

  - Cryptosystem and Data Security

  - Energy Management

On the whole, we provide the finest Hadoop project ideas and topics in your areas of interest. We also provide a documentation/dissertation service for Hadoop projects for final year students. At delivery, we include supplementary materials such as project screenshots, a running video, running guidelines, and software installation instructions. We believe you will join hands with us for the best Hadoop project development.