Establish Hadoop Projects with skilled professionals.

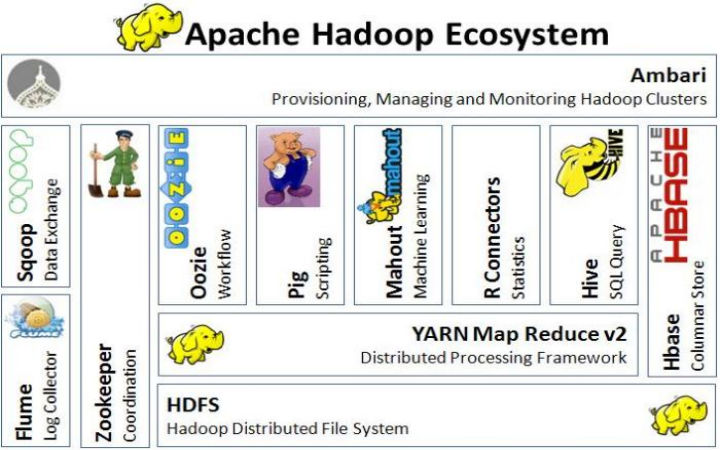

What is Hadoop? Hadoop is an open-source software framework, written in Java, for distributed storage and processing of data on computer clusters. Massive amounts of data can be stored effectively on the Hadoop platform, and many problems faced in big data projects can be solved using Hadoop concepts. Our team carries out Hadoop projects in the cloud domain for computer science and information technology, including Hadoop projects for final-year students and research scholars. We provide Hadoop project topics drawn from reputed publishers and indexes such as IEEE, ACM, Scopus, and Springer. The core of Hadoop can be divided into two parts: the storage part, handled by HDFS (Hadoop Distributed File System), and the processing part, handled by MapReduce.

Hadoop distributed file system:

HDFS (Hadoop Distributed File System) gives programmers storage capacity that grows as more nodes are added to the cluster. The benefits of HDFS are:

- Fault tolerance.

- Horizontal scalability.

- Commodity hardware.
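The three benefits above can be illustrated with a small in-memory sketch: a file is cut into fixed-size blocks, each block is copied to several nodes, and the file stays readable when a node fails. This is a toy model under stated assumptions, not HDFS itself. The function names, the 4-byte block size, and the node names `dn1` to `dn4` are hypothetical, and the simple rotating placement stands in for HDFS's real rack-aware placement policy.

```python
# Toy values chosen for illustration only; real HDFS uses much larger blocks
# (128 MB by default in Hadoop 2 and later) and a default replication factor of 3.
BLOCK_SIZE = 4   # bytes per block in this sketch
REPLICATION = 3  # copies kept of every block

def split_into_blocks(data: bytes, block_size: int = BLOCK_SIZE) -> list[bytes]:
    """Cut a file's contents into fixed-size blocks; the last block may be shorter."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]

def place_replicas(num_blocks: int, nodes: list[str],
                   replication: int = REPLICATION) -> dict[int, list[str]]:
    """Put each block on `replication` distinct nodes, rotating the starting node
    so that replicas are spread across every node in the cluster."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    placement = {}
    for block_id in range(num_blocks):
        start = block_id % len(nodes)
        placement[block_id] = [nodes[(start + k) % len(nodes)] for k in range(replication)]
    return placement

def readable_after_failure(placement: dict[int, list[str]], failed: set[str]) -> bool:
    """Fault tolerance: a block stays readable while any of its replicas is on a healthy node."""
    return all(any(node not in failed for node in replicas) for replicas in placement.values())

blocks = split_into_blocks(b"hello hadoop world")  # 18 bytes -> 5 blocks
placement = place_replicas(len(blocks), ["dn1", "dn2", "dn3", "dn4"])
print(readable_after_failure(placement, {"dn2"}))  # True
```

Adding a fifth node to the list would simply give the rotation one more place to put replicas, which is the idea behind horizontal scalability on commodity machines.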

Hadoop MapReduce:

MapReduce is both a software framework and a programming model for processing large amounts of data. It simplifies processing vast datasets in parallel on clusters of commodity hardware, and it does so in a fault-tolerant way, so the failure of an individual machine does not stop the job. A MapReduce job can process petabytes of data spread across thousands of nodes in a network. Computation can run over both structured and unstructured data: structured data is usually kept in databases, whereas Hadoop stores its input as files in a distributed file system, which is why it handles unstructured data well.
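To make the programming model concrete, here is a minimal single-process word count, the usual introductory MapReduce example. It is only a sketch: the function names are hypothetical, everything runs in one Python process, and so it shows the shape of a map, shuffle, and reduce pipeline but none of Hadoop's parallelism or fault tolerance. Real Hadoop jobs are normally written in Java against Hadoop's own API.

```python
from collections import defaultdict

def map_phase(line: str):
    """Map: emit an intermediate (word, 1) pair for every word in one input record."""
    for word in line.lower().split():
        yield word, 1

def shuffle(pairs):
    """Shuffle: group all intermediate values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Reduce: collapse the list of counts for one word into a single total."""
    return key, sum(values)

def word_count(lines):
    intermediate = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, vs) for k, vs in shuffle(intermediate).items())

print(word_count(["Hadoop stores data", "Hadoop processes data"]))
# {'hadoop': 2, 'stores': 1, 'data': 2, 'processes': 1}
```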

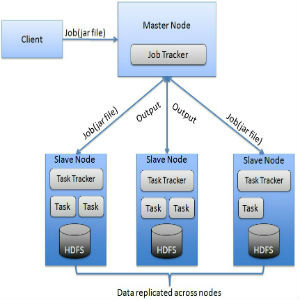

Hadoop MapReduce framework:

The MapReduce framework carries out two fundamental processes:

Mapping:

The master node takes the large input problem, divides it into smaller sub-problems, and distributes them to worker nodes. Each worker node solves its sub-problem and passes the result back to the master node.
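The mapping step can be sketched with threads standing in for worker nodes. In this hypothetical example the input is a list of made-up `"year,temperature"` weather readings; the master splits the list into sub-problems, each worker turns its records into intermediate `(year, temperature)` pairs, and the master gathers the results. The record format, function names, and worker count are assumptions for illustration; in a real cluster each map task would run on a separate machine rather than in a thread.

```python
from concurrent.futures import ThreadPoolExecutor

def split_input(records: list[str], num_splits: int) -> list[list[str]]:
    """Master: divide the input into roughly equal sub-problems (input splits)."""
    size = max(1, -(-len(records) // num_splits))  # ceiling division, at least 1
    return [records[i:i + size] for i in range(0, len(records), size)]

def map_split(split: list[str]) -> list[tuple[str, int]]:
    """Worker: turn each 'year,temperature' record into an intermediate (year, temp) pair."""
    pairs = []
    for record in split:
        year, temp = record.split(",")
        pairs.append((year, int(temp)))
    return pairs

def run_map_phase(records: list[str], num_workers: int = 3) -> list[tuple[str, int]]:
    """Master: hand one split to each worker and gather the partial results in split order."""
    splits = split_input(records, num_workers)
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        return [pair for partial in pool.map(map_split, splits) for pair in partial]

records = ["1950,0", "1950,22", "1949,111", "1949,78", "1950,-11"]
print(run_map_phase(records))
# [('1950', 0), ('1950', 22), ('1949', 111), ('1949', 78), ('1950', -11)]
```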

Reducing:

The master node collects the answers to all the sub-problems and combines them in a predefined way to produce the answer to the original problem. In other words, the intermediate values produced during mapping are reduced to a smaller set of results.
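A matching sketch of the reducing step: intermediate `(year, temperature)` pairs are sorted so that equal keys sit next to each other, then each group is collapsed to a single value. Here the "predefined way" of combining is assumed to be taking the maximum; a sum or average would work the same way. The function name and sample pairs are hypothetical, and for simplicity everything is done in one function rather than in separate reduce tasks.

```python
from itertools import groupby
from operator import itemgetter

def reduce_max_by_key(intermediate: list[tuple[str, int]]) -> dict[str, int]:
    """Sort pairs so equal keys sit together, then collapse each key's values to one answer."""
    ordered = sorted(intermediate, key=itemgetter(0))
    return {year: max(temp for _, temp in group)
            for year, group in groupby(ordered, key=itemgetter(0))}

pairs = [("1950", 0), ("1950", 22), ("1949", 111), ("1949", 78), ("1950", -11)]
print(reduce_max_by_key(pairs))  # {'1949': 111, '1950': 22}
```

Sorting before grouping mirrors the fact that MapReduce sorts intermediate keys before they reach the reduce function.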

Hadoop cluster architecture

A Hadoop cluster contains various nodes, which can be classified into master nodes and slave nodes. Master nodes coordinate the storage and processing of large amounts of data in the cloud environment, while slave nodes store the data and carry out the actual data processing on the server side.

Types of master nodes:

- Name node: the HDFS layer uses the name node to manage file names and metadata, such as which data nodes hold each block of a file.

- Job tracker node: the MapReduce layer uses the job tracker to schedule jobs across the machines in the cluster and to assign individual tasks to individual nodes.
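The name node's role can be pictured as a lookup table that holds metadata only, never file contents: it maps each file path to its block IDs, and each block ID to the data nodes holding a copy. A client asks the name node where the blocks are and then reads them from the data nodes. The class below is a hypothetical toy, not Hadoop's API; the `blk_` block names and node names are made up for illustration.

```python
from dataclasses import dataclass, field

@dataclass
class NameNode:
    """Toy name node: keeps only metadata, never the file contents themselves."""
    namespace: dict[str, list[str]] = field(default_factory=dict)        # path -> block IDs
    block_locations: dict[str, list[str]] = field(default_factory=dict)  # block ID -> data nodes

    def add_file(self, path: str, blocks: dict[str, list[str]]) -> None:
        """Register a file whose blocks (block ID -> replica nodes) are already stored."""
        if path in self.namespace:
            raise FileExistsError(path)
        self.namespace[path] = list(blocks)  # block IDs in file order
        self.block_locations.update(blocks)

    def locate(self, path: str) -> list[tuple[str, list[str]]]:
        """Answer a client's lookup: which data nodes hold each block of this file, in order."""
        if path not in self.namespace:
            raise FileNotFoundError(path)
        return [(b, self.block_locations[b]) for b in self.namespace[path]]

nn = NameNode()
nn.add_file("/logs/day1.txt", {"blk_1": ["dn1", "dn2", "dn3"], "blk_2": ["dn2", "dn3", "dn4"]})
print(nn.locate("/logs/day1.txt"))
# [('blk_1', ['dn1', 'dn2', 'dn3']), ('blk_2', ['dn2', 'dn3', 'dn4'])]
```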

Types of slave nodes:

- Data node: the HDFS layer uses data nodes to store the actual data blocks.

- Task tracker node: the MapReduce layer uses task trackers to run the map and reduce tasks assigned by the job tracker.
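The cooperation between the job tracker and the task trackers on slave nodes can be sketched as a greedy scheduler: each map task (one per block) goes to a task tracker with a free slot, preferring one running on a data node that already stores that block so the data does not have to travel over the network. This is a simplified, hypothetical model; the function name, the fixed number of slots per tracker, and the least-loaded tie-breaking rule are assumptions, and Hadoop's actual scheduler is more involved.

```python
def schedule_map_tasks(block_locations: dict[str, list[str]], trackers: list[str],
                       slots_per_tracker: int = 2) -> dict[str, str]:
    """Toy job tracker: assign each block's map task to a task tracker,
    preferring a tracker on a node that already holds a replica of the block."""
    free = {t: slots_per_tracker for t in trackers}
    assignment = {}
    for block, replicas in block_locations.items():
        local = [t for t in replicas if free.get(t, 0) > 0]
        candidates = local or [t for t in trackers if free[t] > 0]
        if not candidates:
            raise RuntimeError("no free task slots; a real scheduler would queue the task")
        chosen = max(candidates, key=lambda t: free[t])  # least-loaded candidate
        free[chosen] -= 1
        assignment[block] = chosen
    return assignment

locations = {"blk_1": ["dn1", "dn2"], "blk_2": ["dn1"], "blk_3": ["dn1", "dn3"]}
print(schedule_map_tasks(locations, ["dn1", "dn2", "dn3"], slots_per_tracker=1))
# {'blk_1': 'dn1', 'blk_2': 'dn2', 'blk_3': 'dn3'}
```

Because the sketch is greedy, `blk_2` ends up on `dn2`, which does not hold it; a smarter scheduler could have placed `blk_1` on `dn2` instead and kept every task data-local.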

Applications of Hadoop Projects

Their dedication to the work is extraordinary. All the materials needed for project implementation were provided. Thanks a lot for the support.

The developers trained students to demonstrate both theoretical and practical knowledge of their projects. They clarified my doubts in detail and with great care.

I had an enriching learning experience in my project thanks to the team members' guidance. They explain every concept with diagrams for better understanding.

I use this opportunity to thank the team members for their complete guidance on my project.